Apache Hive is a data warehouse and analytics project built on top of Apache Hadoop. It was originally developed by engineers at Facebook, who found that accessing and querying data stored in Hadoop was too difficult because it still required writing MapReduce jobs in Java. As a solution, they built an interface that lets users retrieve and manipulate data with a SQL-like language.

In the previous tutorial we installed a multi-node Apache Hadoop cluster on CentOS 7 servers. This tutorial shows how to connect Apache Hive to that Hadoop cluster.

Download the Hive Binary

$ wget https://www-us.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz
$ tar -zxvf apache-hive-3.1.2-bin.tar.gz
$ sudo mv apache-hive-3.1.2-bin /usr/local/hive
$ sudo chown -R hadoop:hadoop /usr/local/hive
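Before extracting, you can check the tarball against the checksum that Apache publishes next to each release file. The sketch below assumes a SHA-256 checksum, so confirm which digest the download page actually provides. `verify_sha256` is a hypothetical helper name and is not part of Hive.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hive or Hadoop).
# verify_sha256 FILE EXPECTED_HEX
# Exit status 0 if the SHA-256 digest of FILE equals EXPECTED_HEX, non-zero otherwise.
verify_sha256() {
  local file=$1 expected=$2
  # sha256sum -c reads "HASH  FILENAME" lines (two spaces) from stdin ("-");
  # --status prints nothing and reports the result only through the exit code
  printf '%s  %s\n' "$expected" "$file" | sha256sum -c --status -
}
```

Usage: `verify_sha256 apache-hive-3.1.2-bin.tar.gz "<hash copied from the published checksum file>" && echo OK`.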

Set Up Environment Variables

$ su - hadoop

$ vi ~/.bashrc

# Adjust the file to match the lines below

# Java

export JAVA_HOME=$(dirname $(dirname $(readlink $(readlink $(which javac)))))

# Hadoop

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_COMMON_LIB_NATIVE_DIR"

export HADOOP_CLASSPATH=${JAVA_HOME}/lib/tools.jar

# Yarn

export YARN_HOME=$HADOOP_HOME

# Hive

export HIVE_HOME=/usr/local/hive

export HIVE_CONF_DIR=/usr/local/hive/conf

# Classpath

export CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar:$HADOOP_HOME/lib/*:$HIVE_HOME/lib/*

# Path

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin

$ source ~/.bashrc
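After ~/.bashrc is reloaded, a quick sanity check can catch a mistyped path. It can also catch a variable that was assigned without `export`, since child processes such as the `hive` launcher only see exported variables. This is a minimal sketch, and `check_hive_env` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_hive_env
# Verifies that the variables from ~/.bashrc are set, point to directories,
# and (for the Hive ones) are exported so that child processes can see them.
check_hive_env() {
  local var value status=0
  for var in JAVA_HOME HADOOP_HOME HIVE_HOME HIVE_CONF_DIR; do
    value=${!var}   # bash indirect expansion: value of the variable whose name is in $var
    if [[ -z "$value" ]]; then
      echo "NOT SET: $var"
      status=1
    elif [[ ! -d "$value" ]]; then
      echo "NOT A DIRECTORY: $var=$value"
      status=1
    fi
  done
  for var in HIVE_HOME HIVE_CONF_DIR; do
    # bash's "export -p" lists only exported variables, as "declare -x NAME=..." lines
    if ! export -p | grep -q "^declare -x ${var}="; then
      echo "NOT EXPORTED: $var"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_hive_env && echo "environment OK"` as the hadoop user. Any problem is printed on its own line.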

Configure hive-env.sh

$ cd $HIVE_CONF_DIR
$ sudo cp hive-env.sh.template hive-env.sh
$ sudo chmod +x hive-env.sh
$ sudo vi hive-env.sh

# add at the end of the file
export HIVE_HOME=/usr/local/hive
export HIVE_CONF_DIR=/usr/local/hive/conf
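If you would rather script this edit than use vi, you need an append that does nothing when the line is already there. That way, running the setup twice does not duplicate entries. This is a sketch with a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append_once LINE FILE
# Appends LINE to FILE only if FILE does not already contain exactly that line.
append_once() {
  local line=$1 file=$2
  # -x: match whole lines, -F: treat LINE as a fixed string, not a regex
  grep -qxF -- "$line" "$file" 2>/dev/null && return 0
  # make sure the file ends with a newline so the new line is not glued onto the last one
  if [[ -s "$file" && -n "$(tail -c 1 "$file")" ]]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}
```

Usage: `append_once 'export HIVE_HOME=/usr/local/hive' "$HIVE_CONF_DIR/hive-env.sh"`, and likewise for `HIVE_CONF_DIR`. Because hive-env.sh was copied with sudo, run this from a shell that has write permission on the file.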

Install MySQL (MariaDB) and the MySQL JDBC Connector (Hive Metastore)

$ sudo yum install mariadb-server
$ sudo systemctl enable mariadb
$ sudo systemctl start mariadb
$ sudo mysql_secure_installation
$ wget http://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.30.tar.gz
$ tar -zxvf mysql-connector-java-5.1.30.tar.gz
$ sudo cp mysql-connector-java-5.1.30/mysql-connector-java-5.1.30-bin.jar $HIVE_HOME/lib/
$ sudo chmod +x $HIVE_HOME/lib/mysql-connector-java-5.1.30-bin.jar

Create a MySQL database named metastore for Hive (initSchema)

$ mysql -u root -p

CREATE DATABASE metastore;
USE metastore;
SOURCE /usr/local/hive/scripts/metastore/upgrade/mysql/hive-schema-3.1.0.mysql.sql;
-- use the same password as javax.jdo.option.ConnectionPassword in hive-site.xml
CREATE USER 'hive'@localhost IDENTIFIED BY '';
GRANT ALL ON metastore.* TO 'hive'@localhost;
FLUSH PRIVILEGES;
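Hive also ships `schematool` in `$HIVE_HOME/bin`. `schematool -dbType mysql -initSchema` is the tool-based alternative to sourcing the SQL script by hand, so use one or the other, not both. `schematool -dbType mysql -info` reports the schema version stored in the metastore database. It reads the JDBC URL, driver, user and password from hive-site.xml, so the check below only works after that file is configured in the next step. `check_metastore_schema` is a hypothetical wrapper. Its output filter assumes that the version information appears on lines containing the word "version".

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_metastore_schema
# Assumes $HIVE_HOME/bin is on PATH and hive-site.xml holds the metastore connection settings.
check_metastore_schema() {
  local out
  if ! out=$(schematool -dbType mysql -info 2>&1); then
    echo "schematool could not read the metastore schema:" >&2
    printf '%s\n' "$out" >&2
    return 1
  fi
  # assumption: the version information is on lines containing the word "version"
  printf '%s\n' "$out" | grep -i 'version'
}
```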

Configure hive-site.xml

$ cp $HIVE_CONF_DIR/hive-default.xml.template $HIVE_CONF_DIR/hive-site.xml

$ vi $HIVE_CONF_DIR/hive-site.xml

# hive-site.xml: set these properties (each already exists in the copied template, so edit its value)

<property>

<name>hive.exec.local.scratchdir</name>

<value>/tmp/hive_temp</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.execution.engine</name>

<value>mr</value>

<description>

Expects one of [mr, tez, spark].

Chooses execution engine. Options are: mr (Map reduce, default)</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost/metastore?createDatabaseIfNotExist=true&amp;useSSL=false</value>

<description>metadata is stored in a MySQL server</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>***</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive_tmp</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission</description>

</property>
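Two XML details commonly break this file. First, an `&` inside a value, such as the one separating the JDBC URL parameters above, must be written as `&amp;`, or the XML parser rejects the file. Second, the hive-default.xml.template shipped with Hive 3.1.2 is widely reported to contain a `&#8;` character reference in one property description, and Hive then fails with an "Illegal character entity" parse error. The sketch below replaces `&#8;` with a space and lists any remaining bare `&`. It uses a hypothetical helper, `check_hive_site`, and assumes GNU sed for `-i`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_hive_site FILE
# 1) replaces the "&#8;" reference (a control character XML 1.0 does not allow) with a space, in place
# 2) lists lines that still contain an "&" that does not start an entity or character reference
check_hive_site() {
  local file=$1 bad
  sed -i 's/&#8;/ /g' "$file" || return 1
  # strip well-formed references; any "&" left over is unescaped
  bad=$(sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9A-Fa-f]+);//g' "$file" | grep -n '&')
  if [[ -n "$bad" ]]; then
    echo "Unescaped '&' (write it as &amp;) on these lines:" >&2
    printf '%s\n' "$bad" >&2
    return 1
  fi
}
```

Usage: `check_hive_site "$HIVE_CONF_DIR/hive-site.xml"`. Each reported line is prefixed with its line number.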

Install HBase

$ cd ~/

$ wget http://apache.mirror.gtcomm.net/hbase/stable/hbase-2.2.3-bin.tar.gz

$ tar -zxvf hbase-2.2.3-bin.tar.gz

$ sudo mv hbase-2.2.3 /usr/local/hbase

$ sudo chown -R hadoop:hadoop /usr/local/hbase

$ sudo chmod -R g+rwx /usr/local/hbase

$ vi ~/.bashrc

# add

export HBASE_HOME=/usr/local/hbase

export PATH=$PATH:$HBASE_HOME/bin

$ source ~/.bashrc

$ cd $HBASE_HOME/conf

$ vi hbase-env.sh

# set JAVA_HOME (adjust the path to the JDK version installed on your server)

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.242.b08-0.el7_7.x86_64/

$ vi hbase-site.xml

# add

<configuration>

<property>

<name>hbase.rootdir</name>

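<!-- must match fs.defaultFS in core-site.xml (the NameNode host:port); 8030 is normally the YARN ResourceManager scheduler port -->

<value>hdfs://localhost:8030/hbase</value>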

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/hadoop/zookeeper</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>
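`hbase.rootdir` has to point at the NameNode named by `fs.defaultFS` in Hadoop's core-site.xml, otherwise HBase cannot reach HDFS. `hdfs getconf -confKey fs.defaultFS` prints the configured value. The hypothetical helpers below compare only the `scheme://host:port` part of the two URIs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of HBase or Hadoop).
# authority_of URI -> prints "scheme://host:port" with any path removed
authority_of() {
  printf '%s\n' "$1" | sed -E 's#^([A-Za-z][A-Za-z0-9+.-]*://[^/]*).*#\1#'
}

# same_namenode ROOTDIR DEFAULTFS -> exit status 0 if both URIs name the same filesystem
same_namenode() {
  [[ "$(authority_of "$1")" == "$(authority_of "$2")" ]]
}
```

Usage: `same_namenode "hdfs://localhost:8030/hbase" "$(hdfs getconf -confKey fs.defaultFS)" || echo "hbase.rootdir does not match fs.defaultFS"`.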

$ sudo mkdir -p /hadoop/zookeeper

$ sudo chown -R hadoop:hadoop /hadoop/

$ start-hbase.sh

Create the Hive directories in HDFS

$ hdfs dfs -mkdir /user/
$ hdfs dfs -mkdir /user/hive
$ hdfs dfs -mkdir /user/hive/warehouse
$ hdfs dfs -mkdir /tmp
$ hdfs dfs -chmod -R a+rwx /user/hive/warehouse
$ hdfs dfs -chmod g+w /tmp
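The same setup can be written so that it is safe to re-run. `hdfs dfs -mkdir -p` creates missing parent directories and does not fail when a directory already exists. This is a sketch with a hypothetical function name. Its default path, /user/hive/warehouse, matches Hive's default `hive.metastore.warehouse.dir`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: idempotent version of the HDFS commands above.
# Assumes the hdfs client is on PATH (from $HADOOP_HOME/bin) and HDFS is running.
setup_hive_hdfs_dirs() {
  local warehouse=${1:-/user/hive/warehouse}   # same default as hive.metastore.warehouse.dir
  # -p creates missing parents (/user, /user/hive) and ignores directories that already exist
  hdfs dfs -mkdir -p "$warehouse" /tmp || return 1
  hdfs dfs -chmod -R a+rwx "$warehouse" || return 1
  hdfs dfs -chmod g+w /tmp
}
```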

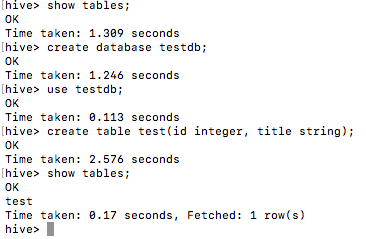

Test Hive Console
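A quick way to test the console non-interactively is `hive -S -e '<query>'`. The `-e` flag runs a single query and exits, and `-S` suppresses progress messages. Every Hive metastore contains the `default` database, so the hypothetical helper below treats its presence in the output as success.

```shell
#!/usr/bin/env bash
# Hypothetical helper: non-interactive smoke test of the Hive console.
hive_smoke_test() {
  local out
  # -S: silent mode (no progress messages), -e: run the quoted query and exit
  out=$(hive -S -e 'SHOW DATABASES;' 2>/dev/null) || return 1
  # the "default" database exists in every Hive metastore
  grep -qw 'default' <<<"$out"
}
```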

Run the Metastore and HiveServer2 as Daemons (Background)

$ mkdir $HIVE_HOME/logs
$ hive --service metastore --hiveconf hive.log.dir=$HIVE_HOME/logs --hiveconf hive.log.file=metastore.log >/dev/null 2>&1 &
$ hive --service hiveserver2 --hiveconf hive.log.dir=$HIVE_HOME/logs --hiveconf hive.log.file=hs2.log >/dev/null 2>&1 &
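Both services start in the background, so the shell returns before they are ready. The sketch below polls a TCP port until it accepts connections. It uses a hypothetical helper that relies on bash's `/dev/tcp` redirection and on coreutils `timeout`. By default the metastore listens on port 9083 (`hive.metastore.port`) and HiveServer2 on port 10000 (`hive.server2.thrift.port`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait_for_port HOST PORT [MAX_ATTEMPTS]
# Returns 0 as soon as HOST:PORT accepts a TCP connection,
# 1 after MAX_ATTEMPTS failed attempts spaced about a second apart.
wait_for_port() {
  local host=$1 port=$2 max_attempts=${3:-60} i
  for ((i = 0; i < max_attempts; i++)); do
    # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
    # timeout stops a single attempt from hanging on an unresponsive host
    if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

Usage: `wait_for_port localhost 9083 120 && wait_for_port localhost 10000 120 && echo "metastore and HiveServer2 are up"`.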

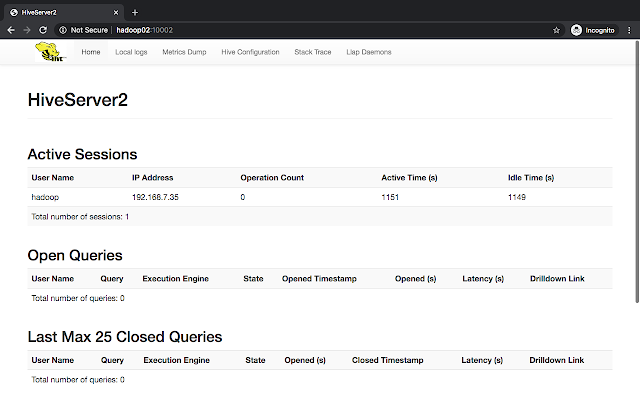

Test the HiveServer2 Web UI

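If HiveServer2 is not already running in the background, start it in the foreground:

$ hiveserver2

Then open http://hadoop02:10002 in a browser (hadoop02 is the host running HiveServer2 in this cluster).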

Figure: HiveServer2 Web UI
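You can also check the Web UI from the command line. The hypothetical helper below uses curl's `-w '%{http_code}'` to print only the HTTP status, and `000` means no connection could be made. The default URL, http://hadoop02:10002/, is taken from the step above, and 10002 is the default `hive.server2.webui.port`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check_hs2_webui [URL]
# Returns 0 if the HiveServer2 Web UI answers with HTTP 200.
check_hs2_webui() {
  local url=${1:-http://hadoop02:10002/} code
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code
  # ("000" if no connection), --max-time: give up after 5 seconds
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
  [[ "$code" == "200" ]]
}
```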
